For many teams, vCluster’s virtual cluster model is a game-changer—it delivers stronger tenant isolation than namespaces without the operational overhead of spinning up full Kubernetes clusters per team or tenant. Resources can even be shared between virtual clusters from the host cluster, making scaling across tenants effortless. But when hosting untrusted or external tenants, shared infrastructure only goes so far.

When platform teams build shared Kubernetes environments, no two tenants are exactly the same. Some teams need strong isolation for compliance. Others care more about efficiency and resource sharing. And almost all need something flexible enough to grow with them over time.

That’s why the future of Kubernetes multi-tenancy can’t be a one-size-fits-all solution. It needs to support multiple tenancy modes—and let you mix and match them as needed.

With vCluster, that’s exactly what we’re enabling.

Why Tenancy Mode Flexibility Matters

In traditional setups, you’re often locked into one tenancy model per cluster:

- Shared nodes maximize utilization, but come with security and performance trade-offs.

- Dedicated nodes improve isolation but require careful provisioning to avoid resource waste.

- Private clusters per tenant offer the strongest boundaries—but at the cost of operational complexity and wasted resources.

This rigidity forces teams to choose between efficiency, isolation, and scalability. But real-world workloads don’t stay static. Your infrastructure shouldn’t either.

The Three Modes of vCluster Tenancy

We designed vCluster to support three distinct tenancy modes, all within a single Kubernetes-native platform: shared nodes, dedicated nodes, and private nodes.

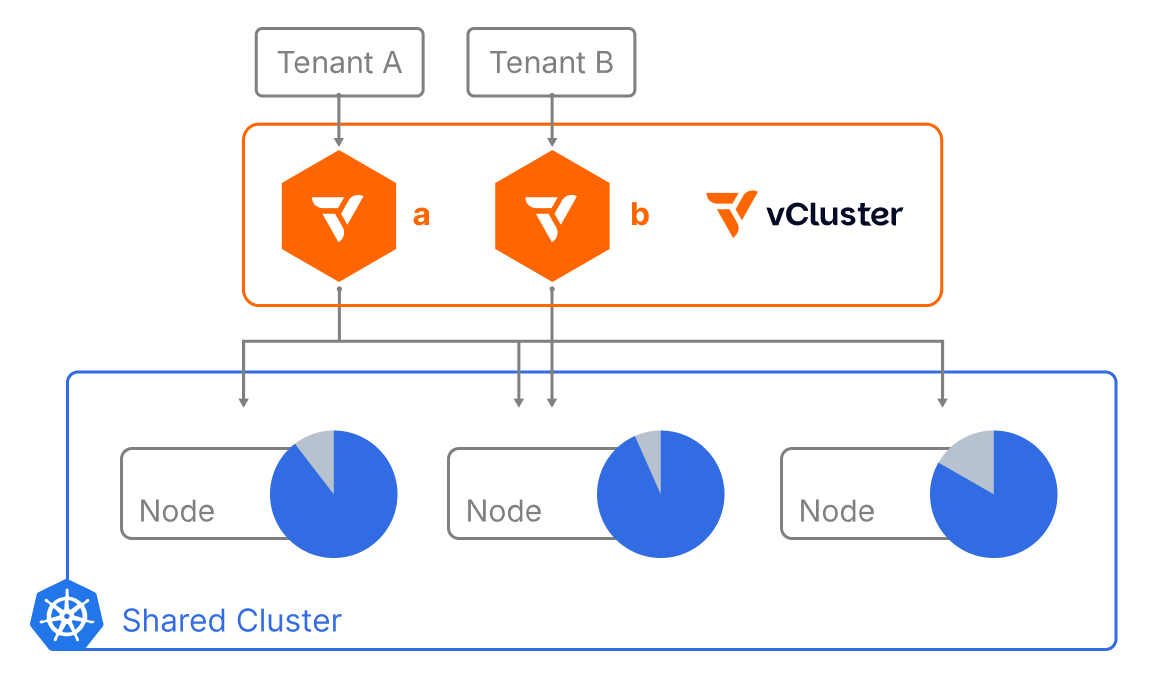

1. Shared Nodes

Tenants share the same underlying nodes, with their workloads colocated. This model is ideal for environments where maximizing GPU/CPU utilization is key. This is the most common way of using vCluster, and the scenario it was originally built for.

Most vCluster deployments sync workloads into a shared host cluster, enabling efficient multi-tenancy and dynamic scheduling with the vCluster syncer. For internal teams with moderate trust boundaries, this is ideal: isolated control planes, shared resources, optimized utilization, and minimal waste.

Each tenant has their own isolated control plane with full control over CRDs and namespaces. However, instead of joining completely dedicated nodes into separate clusters for each tenant, vCluster supports dynamically syncing resources across multiple nodes.

Sharing resources across nodes keeps all of the nodes in the cluster optimized, so no resources go to waste because schedulers can make use of all nodes when distributing work. Things like CNI and CSI are also shared and require policies to be in place for proper isolation.
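To make the utilization argument concrete, here is a rough back-of-the-envelope sketch. The tenant names, CPU requests, and node size are made-up numbers for illustration, and the shared figure is only a lower bound because real schedulers also deal with fragmentation, memory, and other constraints.

```python
import math

def nodes_needed_shared(requests_cpu, node_cpu):
    """Lower bound on node count when all tenants draw from one shared pool."""
    return math.ceil(sum(requests_cpu.values()) / node_cpu)

def nodes_needed_dedicated(requests_cpu, node_cpu):
    """Node count when every tenant is given its own whole nodes."""
    return sum(math.ceil(cpu / node_cpu) for cpu in requests_cpu.values())

# Hypothetical tenants and CPU requests in cores (not taken from the article).
requests_cpu = {"vcluster-a": 3, "vcluster-b": 5, "vcluster-c": 2}
node_cpu = 8  # assumed allocatable cores per node

shared = nodes_needed_shared(requests_cpu, node_cpu)
dedicated = nodes_needed_dedicated(requests_cpu, node_cpu)
print(f"shared pool: {shared} nodes, one pool per tenant: {dedicated} nodes")
```

With these assumed numbers, the shared pool fits on fewer nodes because leftover capacity on one node can absorb another tenant's workloads.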

vNode was launched earlier this year to prevent situations where `vcluster-a` and `vcluster-b` from the diagram above run on the same node without any protection from things like container breakouts, which can happen. vNode isolates tenants at the node level, providing an extra layer of safety.

- 🟢 Pros: High efficiency, minimal overhead

- 🔴 Trade-offs: Requires strict policies (CNI, CSI, etc.) and tools like vNode to isolate containers on the node level

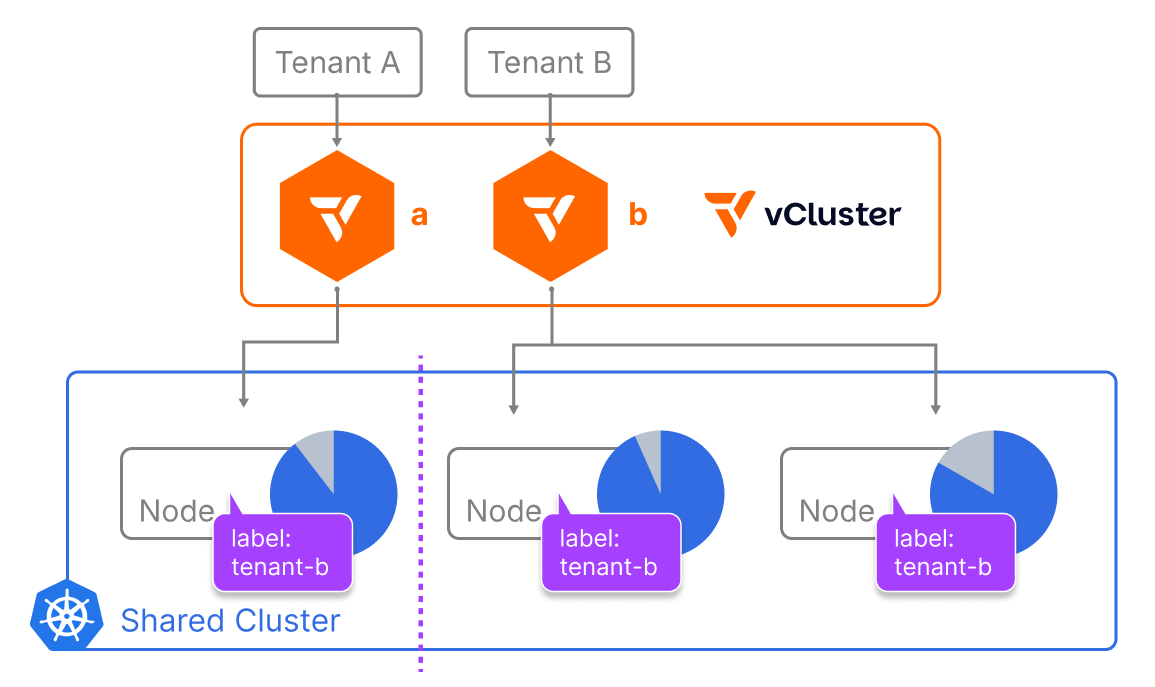

2. Dedicated Nodes

Another option in addition to vNode is to use vCluster with dedicated nodes. This is where each tenant is assigned specific nodes, using label selectors to determine on which node each tenant’s workloads will reside. This provides stronger boundaries while still keeping clusters shared.

Teams running their customers’ production-grade workloads use the dedicated nodes mode to run their virtual clusters on the same EKS cluster, for example, while ensuring that each customer runs on its own nodes inside that cluster.

The one thing to keep in mind with dedicated nodes is that networking and storage are still shared.

- 🟢 Pros: Better isolation; easier to enforce quotas and guarantees

- 🔴 Trade-offs: Can still lead to unused capacity if node allocation isn’t balanced
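The label-selector placement described in this section can be sketched in a few lines. This is a simplified model of equality-based label matching, not vCluster's implementation; the `tenant` label key, node names, and customer names are hypothetical.

```python
def matches(selector, labels):
    """True if every key/value pair in the selector is present on the node."""
    return all(labels.get(key) == value for key, value in selector.items())

def eligible_nodes(nodes, selector):
    """Return the names of nodes whose labels satisfy the tenant's selector."""
    return [name for name, labels in nodes.items() if matches(selector, labels)]

# Hypothetical node inventory: two nodes reserved for customer-a, one for customer-b.
nodes = {
    "node-1": {"tenant": "customer-a"},
    "node-2": {"tenant": "customer-a"},
    "node-3": {"tenant": "customer-b"},
}

print(eligible_nodes(nodes, {"tenant": "customer-a"}))
print(eligible_nodes(nodes, {"tenant": "customer-b"}))
```

A tenant whose selector matches no nodes gets an empty list, which is one way the unbalanced-allocation trade-off shows up: workloads have nowhere to go while other tenants' nodes may sit idle.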

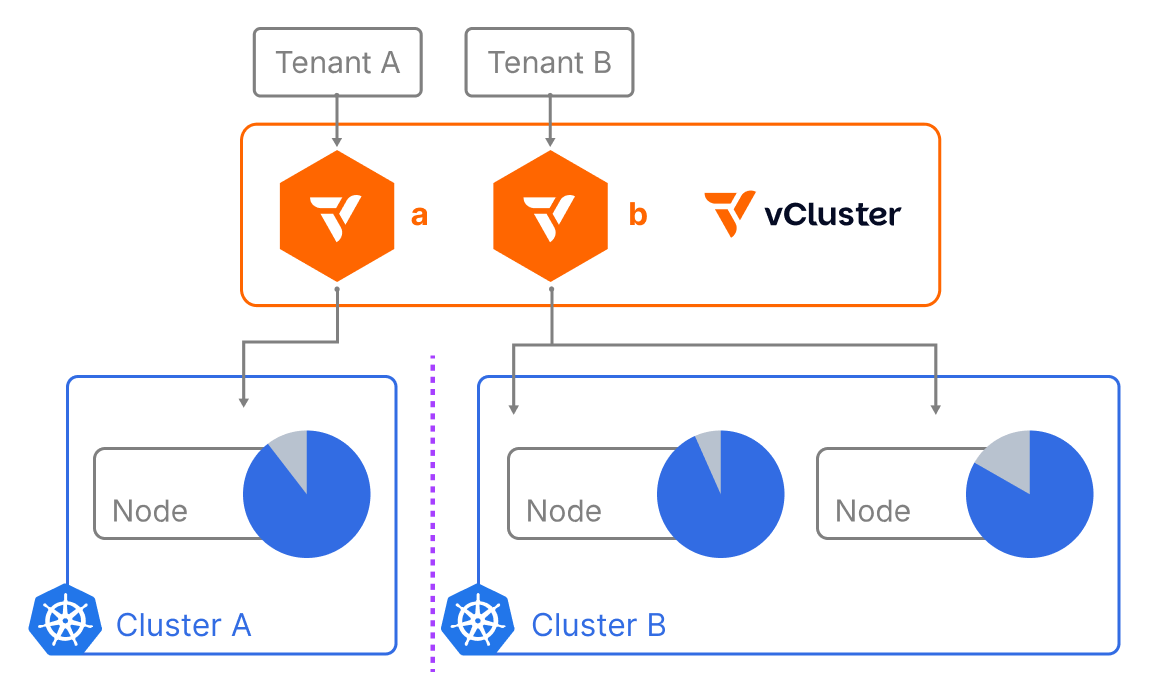

3. Private Nodes (Coming Soon)

Private nodes go one step further than dedicated nodes. With private nodes, each tenant runs in its own logically separate cluster, with isolated control and data planes. Private nodes are completely private: they are not visible to any other tenant outside of the virtual cluster.

These nodes are directly joined into a vCluster control plane, just as you would join them into another regular Kubernetes cluster. That means they have completely separate networking and storage. This effectively makes the virtual cluster a regular cluster with a private node while still running the vCluster control plane as containers, which makes it more efficient to run.

This mode offers maximum separation—ideal for highly regulated or performance-sensitive environments.

- 🟢 Pros: Full isolation of control plane, CRDs, network, and storage

- 🔴 Trade-offs: Operationally heavier without a dynamic platform to manage node allocation and scale

Why This Matters Now

Tenancy requirements are no longer static. A startup might be fine with shared infrastructure today, but need dedicated control tomorrow. Or a team might use shared nodes for running development environments, and may not even need vNode for that use case. But when they’re running their CI processes and need privileged containers to do image building, vNode would provide that extra layer of protection. Then, to run internal production environments, dedicated nodes would make sense. And finally, if you’re a cloud provider and want to offer Kubernetes clusters to your customers, private nodes would be the mode that meets your needs.
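The progression in the paragraph above can be read as a simple decision rule. The function below is only one interpretation of those examples; the parameter names and the order of the checks are assumptions, not an official vCluster recommendation.

```python
def suggest_tenancy_mode(external_customers, production, privileged_containers):
    """Map a workload profile to a tenancy mode, following the examples above."""
    if external_customers:
        # Offering clusters to outside customers: strongest separation.
        return "private nodes"
    if production:
        # Internal production environments.
        return "dedicated nodes"
    if privileged_containers:
        # For example, CI jobs that build images.
        return "shared nodes + vNode"
    # Development environments with moderate trust boundaries.
    return "shared nodes"

print(suggest_tenancy_mode(False, False, False))  # development environment
print(suggest_tenancy_mode(False, False, True))   # CI with privileged builds
print(suggest_tenancy_mode(False, True, False))   # internal production
print(suggest_tenancy_mode(True, True, False))    # cloud provider offering
```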

With vCluster, you don’t have to choose just one approach. You can run shared, dedicated, or private node tenancy—and even combine them across environments—all with Kubernetes-native tooling.

What’s Coming Next

Private nodes will be a game-changer for teams that need strong boundaries without giving up flexibility. Keep your eyes on the Future of Kubernetes Tenancy Launch Series for more news, where we’ll dive into how private nodes work under the hood—and how they make the third mode of tenancy truly viable at scale.

The private nodes feature will go live on August 12, 2025. And don’t miss our co-founders Lukas Gentele and Fabian Kramm as they discuss private nodes in our v0.27 Private Nodes webinar on August 14.
