In the world of cloud-native computing, bare metal Kubernetes represents an increasingly compelling option for organizations seeking maximum performance without the virtualization tax.

But how do we manage multitenancy in these environments? Let’s explore the challenges and solutions, with a particular focus on the open source vCluster project.

Are Virtual Machines the Only Way?

Virtual machines have long been considered the only reliable isolation method for multi-tenant Kubernetes. This assumption forces an impossible choice: sacrifice bare metal performance or compromise security.

But what if we could achieve strong isolation without the VM tax? Recent Kubernetes-native technologies enable isolation at multiple layers — control plane, network, node, and container runtime — creating security boundaries that rival VMs without their performance penalties.

For GPU workloads especially, eliminating VMs means direct hardware access with dramatically lower latency while maintaining strict tenant isolation.

The Case for Bare Metal Kubernetes

Running Kubernetes directly on physical hardware delivers substantial benefits, and the reported numbers bear this out:

Performance: Up to 30% better container performance compared to VMs according to Gcore’s benchmarks

Latency: Network operations can be up to 3x faster without a hypervisor, as reported by Equinix Metal

Resource utilization: No resources are lost to hypervisor overhead, as Spectro Cloud notes

Cost efficiency: Portworx found a potential 30% reduction in total cost of ownership for stable workloads

For GPU-intensive workloads like machine learning and AI inference, the benefits become even more pronounced. Direct access to GPUs without virtualization overhead means faster training times and more efficient inference workloads, especially important for LLM serving, according to NVIDIA.

The Multitenancy Challenge

However, implementing secure multitenancy on bare metal Kubernetes presents unique challenges:

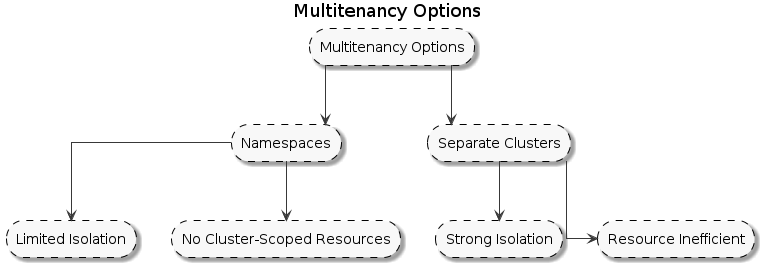

Traditional Kubernetes provides two primary methods for multitenancy:

Namespace-based isolation: Simple but limited. Tenants can’t manage their own CRDs, RBAC, or other cluster-wide resources.

    apiVersion: v1
    kind: Namespace
    metadata:
      name: tenant-a
      labels:
        tenant: tenant-a
    ---
    # Limited resource quotas
    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: tenant-quota
      namespace: tenant-a
    spec:
      hard:
        cpu: "4"
        memory: 8Gi

Separate physical clusters: Secure but expensive and inefficient.

Neither approach is ideal, especially on bare metal, where you want both the security of separate clusters and the efficiency of shared infrastructure.

Enter vCluster: Virtual Kubernetes Clusters

This is where vCluster comes in — an open source solution that creates virtual Kubernetes clusters within a single host cluster.

Each virtual cluster runs its own Kubernetes control plane and supporting components in a pod inside a namespace of the host cluster. One way to install it is with Helm:

    # Install vCluster using Helm
    helm upgrade --install my-vcluster vcluster \
      --values vcluster.yaml \
      --repo https://charts.loft.sh \
      --namespace team-x \
      --repository-config='' \
      --create-namespace

    # Connect to your virtual cluster
    vcluster connect my-vcluster -n team-x

You can find detailed installation instructions and options in the vCluster deployment documentation.

The key advantages of vCluster for bare metal environments include:

Full cluster capabilities: Tenants have their own API server, control plane, and can manage CRDs

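Lightweight implementation: The control plane can run on lightweight distributions such as k3s or k0s (availability and defaults depend on the vCluster version), requiring minimal resources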

Version flexibility: Support for different Kubernetes versions for each tenant

Resource efficiency: All virtual clusters share the same physical infrastructure
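To make the first point concrete: a CustomResourceDefinition is cluster-scoped, so a tenant confined to a namespace usually cannot install one, while a tenant connected to their own virtual cluster can apply it like any cluster admin. The following is a minimal sketch only. The `example.com` group, the `TrainingRun` kind, and the schema fields are hypothetical names chosen for illustration and do not come from vCluster.

```yaml
# Hypothetical CRD a tenant might apply inside their own virtual cluster.
# The group, kind, and schema fields are illustrative assumptions.
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  # The name must have the form <plural>.<group>
  name: trainingruns.example.com
spec:
  group: example.com
  scope: Namespaced
  names:
    plural: trainingruns
    singular: trainingrun
    kind: TrainingRun
  versions:
    - name: v1alpha1
      served: true
      storage: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                model:
                  type: string
                epochs:
                  type: integer
                  minimum: 1
```

Applied through the kubeconfig of a virtual cluster, this definition should be stored by that virtual cluster's API server rather than the host's, so other tenants would not see it unless syncing is explicitly configured.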

A typical values file for a virtual cluster might look like this:

    # vcluster.yaml
    controlPlane:
      distro:
        k8s:
          enabled: true
          version: "v1.32.0"
    policies:
      resourceQuota:
        enabled: true
        quota:
          cpu: "10"
          memory: 2Gi
          pods: "10"

Container Isolation: The Missing Piece

While vCluster provides excellent control plane isolation, container runtime security remains a challenge, especially on bare metal. Several approaches exist:

Standard container isolation: Basic namespaces, cgroups, and capabilities

gVisor: Application kernel that intercepts syscalls (see gVisor documentation)

Kata Containers: Uses lightweight VMs for stronger isolation (see Kata documentation)

RuntimeClass: Kubernetes mechanism to select different container runtimes

Each of these solutions comes with trade-offs between security, performance, and compatibility.
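As a rough illustration of how RuntimeClass ties these options together, the sketch below registers a sandboxed runtime and opts a single pod into it. It assumes gVisor is already installed on the nodes and registered with the container runtime under the handler name `runsc`. The pod name and image are placeholders.

```yaml
# RuntimeClass that maps a name to a runtime handler configured on the nodes.
# Assumes gVisor is installed and registered with the container runtime as "runsc".
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: gvisor
handler: runsc
---
# Pod that opts into the sandboxed runtime via runtimeClassName.
apiVersion: v1
kind: Pod
metadata:
  name: sandboxed-app      # hypothetical name
  namespace: tenant-a
spec:
  runtimeClassName: gvisor
  containers:
    - name: app
      image: nginx:1.27    # placeholder image
```

Pods that omit `runtimeClassName` keep using the node's default runtime, so sandboxing can be applied selectively to untrusted workloads. A Kata Containers setup would follow the same pattern with a different handler name, which depends on how Kata was installed.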

An emerging solution in this space is vNode, which provides strong isolation at the node level using Linux user namespaces. As detailed in the vNode architecture documentation, it isolates workloads directly at the node level while maintaining the performance advantages of bare metal. Unlike solutions that rely on VMs or syscall translation, vNode is container-native and designed specifically for Kubernetes environments.

Putting It All Together

By combining vCluster for control plane isolation with appropriate container isolation strategies like vNode, organizations can build secure, efficient multi-tenant environments on bare metal Kubernetes:

Control plane isolation: vCluster provides tenant separation at the API level

Resource allocation: Use Kubernetes resource quotas and limits

Network isolation: Implement network policies with CNI plugins like Cilium

Node-level isolation: Leverage container runtime security solutions

This layered approach ensures that both the control plane and the workloads themselves are properly isolated, even when running on shared physical infrastructure.
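For the network layer, one possible starting point is a NetworkPolicy in the host namespace that backs a tenant's virtual cluster. This sketch assumes the tenant's pods are synced into the host namespace `team-a` and that the CNI plugin enforces NetworkPolicy (Cilium does). The policy name is hypothetical.

```yaml
# Applied in the host namespace that backs a tenant's virtual cluster.
# Allows ingress only from pods in the same namespace; all other ingress is denied.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-namespace-only   # hypothetical name
  namespace: team-a
spec:
  podSelector: {}          # select every pod in the namespace
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector: {}  # any pod in this same namespace
```

This only restricts ingress. A stricter setup would likely add egress rules as well, with explicit allowances for DNS and for the virtual cluster's own control plane traffic.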

Practical Example: Multi-tenant ML Platform

Let’s look at a practical example — a multi-tenant machine learning platform running on bare metal Kubernetes:

    # Create a values file for the data science team's virtual cluster
    cat > team-a-vcluster.yaml <<EOF
    controlPlane:
      distro:
        k8s:
          enabled: true
          version: "v1.32.0"
    policies:
      resourceQuota:
        enabled: true
        quota:
          cpu: "10"
          memory: 2Gi
          pods: "10"
    EOF

    # Install the virtual cluster
    helm upgrade --install team-a-vcluster vcluster \
      --values team-a-vcluster.yaml \
      --repo https://charts.loft.sh \
      --namespace team-a \
      --repository-config='' \
      --create-namespace

    # Connect to the virtual cluster
    vcluster connect team-a-vcluster -n team-a

    # Deploy ML workloads in the isolated environment
    kubectl apply -f ml-training-job.yaml

Each data science team gets their own virtual Kubernetes cluster with dedicated GPU resources, while the underlying infrastructure remains efficiently utilized. This approach is already being adopted by organizations running large-scale AI workloads on bare metal Kubernetes, as shown in the Box case study.
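The post does not show the contents of `ml-training-job.yaml`, so the following is only a guess at what a minimal version might contain. It assumes the host nodes run the NVIDIA device plugin and advertise the `nvidia.com/gpu` resource. The image and command are placeholders, and the CPU and memory values are chosen to fit inside the quota from the values file.

```yaml
# ml-training-job.yaml (hypothetical contents; the post does not show this file)
apiVersion: batch/v1
kind: Job
metadata:
  name: ml-training
spec:
  backoffLimit: 2
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: trainer
          image: registry.example.com/team-a/trainer:latest   # placeholder image
          command: ["python", "train.py"]                      # placeholder entrypoint
          resources:
            requests:
              cpu: "2"
              memory: 1Gi
            limits:
              cpu: "2"
              memory: 1Gi
              nvidia.com/gpu: 1   # extended resources are requested via limits
```

Setting explicit CPU and memory requests matters here because a namespace with a quota on those resources can reject pods that do not declare them.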

Conclusion

Bare metal Kubernetes with vCluster offers a compelling solution for organizations seeking maximum performance without sacrificing multitenancy. By leveraging open source tools like vCluster and complementing them with appropriate container isolation mechanisms, you can build environments that are both high-performing and properly isolated.

As the ecosystem evolves with solutions like vNode that provide strong isolation without the performance penalties of VMs, the case for virtualizing Kubernetes on bare metal becomes even stronger.

Thanks for taking the time to read this post. I hope you found it interesting and informative.
